Why doesn’t the negatively charged electron in a hydrogen atom fall onto the positively charged proton? Why do hydrogen atoms pair into molecules while helium atoms don’t? And did you know that the answer to the second of these questions is surprisingly close to the distinction between metals and insulators?

“Think how hard physics would be if particles could think.”

— Murray Gell-Mann

Let us quickly review what we learned about the laws of the quantum world in a previous post. The description contradicts common sense, yet it is the simplest one we have found that has survived all experimental tests. The rules of the quantum game can be summarized in only a few principles, which the particles obey without exception. As Murray Gell-Mann, a pioneer of elementary particle physics, pointed out: particles do not think.

With electrons in mind, the rules are as follows:

(1) Electrons are better thought of as waves than as point objects. The bigger the magnitude of the wave at a given point, the higher the probability that the electron will be found at that point when someone asks for (i.e. measures) its position. These waves propagate in space according to the so-called Schrödinger equation, which is very similar to the wave equation, the one that describes the motion of waves on a guitar string or on the surface of a pond. The most tangible experimental evidence for the wave-like character of electrons comes from the double slit experiment.

(2) The superposition principle says that if an electron can be in one state (let’s call it A) and in another state (let’s call this one B), then it can also exist in a superposition of those two states (something like A + B). Waves on a pond also have this property, which means that several waves can move in various directions on the water surface simultaneously, each of them ignoring all the others. But there is even more to it! The superposition principle means that a single electron can at the same time be in Hong Kong and in Rio de Janeiro, simply because it certainly can be in Hong Kong only or in Rio only. However, if someone measures its position, the electron will randomly choose to be in one of the cities.

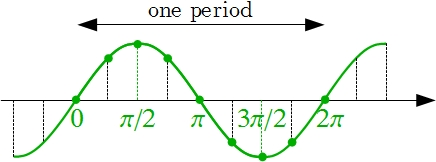

(3) How do we deal with momentum in the quantum world? Let’s make an analogy with position. A typical electron wave extends in space, therefore the electron doesn’t have a well-defined position. There are, however, special waves that are very narrow in space. Such waves correspond to electrons with a well-defined position. Similarly, an electron possesses a “sharp” value of momentum only for certain special waves and a “smeared” value for all the other waves. The special waves that carry well-defined momentum are just the ordinary sine waves. The shorter the wavelength, the higher the momentum of the electron. The “average” momentum of a general electron wave can be estimated from the typical size of its ripples. The narrower they are, the larger the momentum the wave carries.

(4) We can make a similar statement about the energy of an electron. A general electron wave does not have a well-defined energy. There are, however, some special waves that carry a “sharp” amount of energy. These are the standing waves, and they convey all the information about the physical properties of the studied system. For example, the standing electron waves in a hydrogen atom are labelled 1s, 2s, 2p etc., and they are widely used to describe the chemical properties of molecules.

(5) Besides behaving like waves that move in space, electrons also carry a property called spin. At our level of discussion the meaning of spin remains unclear. We just accept as a fact that the spin of an electron can have two opposite values. No more!

(6) The exclusion principle says that two electrons cannot simultaneously be in the same state. There can be at most two electrons wobbling as the same wave, e.g. as the 1s orbital of a hydrogen atom, but then they are forced to have opposite values of spin.
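Point (3) can be made quantitative with the de Broglie relation, which says that a sine wave with wavelength λ carries momentum p = h/λ, where h is the Planck constant. The following minimal sketch (the function names are our own, and the electron is assumed to be slow enough that relativity can be ignored) shows how quickly the kinetic energy grows as the ripples get narrower.

```python
# Physical constants (CODATA values, rounded).
PLANCK_H = 6.62607015e-34         # Planck constant, J*s
ELECTRON_MASS = 9.1093837e-31     # kg
ELECTRON_VOLT = 1.602176634e-19   # J

def momentum_from_wavelength(wavelength_m):
    """de Broglie relation: p = h / wavelength."""
    return PLANCK_H / wavelength_m

def kinetic_energy_ev(wavelength_m):
    """Kinetic energy p^2 / (2m) of a non-relativistic electron, in eV."""
    p = momentum_from_wavelength(wavelength_m)
    return p**2 / (2 * ELECTRON_MASS) / ELECTRON_VOLT

# Halving the wavelength doubles the momentum and quadruples the energy.
for wavelength_pm in (400, 200, 100, 50):
    energy = kinetic_energy_ev(wavelength_pm * 1e-12)
    print(f"wavelength {wavelength_pm:4d} pm -> kinetic energy {energy:8.1f} eV")
```

Ripples about as wide as an atom (roughly 100 pm) already correspond to energies of the order of a hundred electronvolts, which is why squeezing an electron wave is costly.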

The principles listed above were discussed in the previous posts. Now we will add one more: How to calculate the energy of an electron wave?

Consider as an example the hydrogen atom. It resembles a miniature solar system. The heavy proton attracts the lighter electron much as the heavy Sun attracts the lighter Earth. The mechanical energy of the Earth has two contributions. First, the kinetic energy, proportional to the square of the Earth’s orbital velocity. Since we can express velocity as the ratio of momentum and mass, we can equally well say that the kinetic energy is proportional to the square of the Earth’s orbital momentum. Second, the potential energy in the gravitational field of the Sun. The closer the Earth happens to be to the Sun, the lower its potential energy. And vice versa, energy is needed to move up in a gravitational field. Everyone who has ever hiked in the mountains knows this. (By the way, the Earth really changes its distance from the Sun by about 3% during every orbit. This, however, has nothing to do with summer and winter. The Earth comes nearest to the Sun in January.)

One could find the energy of an electron in a hydrogen atom in the same way if only electrons were point particles. But they are not. An electron is a wave extending all around the proton, so the distance (and hence also the potential energy) is not well defined. One more step in the calculation is necessary: we have to calculate the potential energy at each point of the wave and average it. More precisely, we have to find a weighted average where the “weight” corresponds to the magnitude of the wave. It is like calculating the final grade from a math class in high school: The big tests have more impact on the final grade than the small ones. In the same way the parts of the wave with a large magnitude have more impact on the average potential energy.
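The weighted average described above can be sketched numerically for the 1s wave of hydrogen. We assume so-called atomic units (distances in Bohr radii of about 52.9 pm, energies in hartree, with 1 hartree ≈ 27.2 eV), in which the 1s wave is simply exp(-r) and the potential energy is -1/r. The weight is taken as the square of the wave's magnitude (the probability) times the area of the spherical shell at distance r. The function names and the integration settings are illustrative choices only.

```python
import math

def wave_1s(r):
    """Unnormalised 1s wave at distance r (in Bohr radii): exp(-r)."""
    return math.exp(-r)

def potential(r):
    """Coulomb potential energy of the electron at distance r, in hartree."""
    return -1.0 / r

def average_potential(r_max=30.0, steps=200_000):
    """Weighted average of potential(r); the weight is |wave|^2 times the
    area 4*pi*r^2 of the spherical shell at distance r (midpoint rule)."""
    dr = r_max / steps
    weighted_sum = 0.0
    total_weight = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr                      # midpoint of the i-th shell
        weight = wave_1s(r) ** 2 * 4 * math.pi * r**2 * dr
        weighted_sum += weight * potential(r)
        total_weight += weight
    return weighted_sum / total_weight

HARTREE_IN_EV = 27.2114
v_avg = average_potential()
print(f"average potential energy: {v_avg:.4f} hartree = {v_avg * HARTREE_IN_EV:.1f} eV")
```

Dividing by the total weight plays the same role as dividing by the total number of points when computing a final grade, so the wave does not need to be normalised beforehand.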

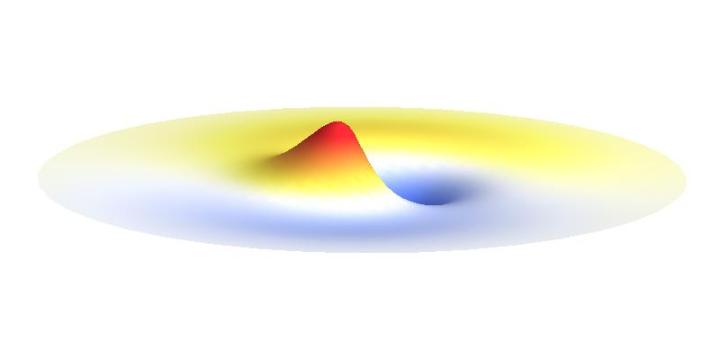

The kinetic energy in the quantum world remains proportional to the square of the momentum. Since (see point (3) above) momentum is in general not a sharp-valued quantity, neither is the kinetic energy. However, even if neither the potential nor the kinetic energy is a well-defined quantity, their sum can be! This happens if the local curvature of the wave (i.e. how wavy it is around a given point, which corresponds to momentum) at every distance is such that we get the same sum of potential and kinetic energy everywhere. Such waves can be found. They are the standing waves discussed above (in point (4)). To get a better impression consider the 1s orbital illustrated below. It has a large curvature (large kinetic energy) near the center, and it is relatively flat (small kinetic energy) on the outside.
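The statement that a standing wave gives the same sum of kinetic and potential energy everywhere can be checked numerically for the 1s orbital. In the sketch below we again assume atomic units (Bohr radii and hartree) and the wave exp(-r). The “local” kinetic energy is our shorthand for the kinetic-energy operator applied to the wave and divided by the wave; for a spherically symmetric wave it equals -u''/(2u) with u = r × wave, and the curvature u'' is estimated by a finite difference.

```python
import math

def u(r):
    """r times the 1s wave exp(-r); distances in Bohr radii."""
    return r * math.exp(-r)

def local_kinetic(r, h=1e-4):
    """Local kinetic energy at distance r, in hartree: -(1/2) * u''(r) / u(r).
    The second derivative (the curvature) is a central difference."""
    curvature = (u(r + h) - 2 * u(r) + u(r - h)) / h**2
    return -0.5 * curvature / u(r)

def local_potential(r):
    """Coulomb potential energy at distance r, in hartree."""
    return -1.0 / r

for r in (0.25, 0.5, 1.0, 2.0):
    t, v = local_kinetic(r), local_potential(r)
    print(f"r = {r:4.2f}: kinetic {t:+.3f}, potential {v:+.3f}, sum {t + v:+.3f}")
```

Near the proton the kinetic term is large and the potential term is strongly negative; further out both shrink, but their sum stays at -0.5 hartree (about -13.6 eV) at every distance.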

Why doesn’t the electron in a hydrogen atom fall onto the proton? They are attracted by their opposite electric charge so the electron wave should try to get as close to the proton as possible to minimize the potential energy. However, such a narrow wave is very spiky, which means that it has a large momentum and therefore also a large kinetic energy. What is good for one is not good for the other. In its quest to minimize its energy the electron finds a delicate balance when it surrounds the proton in just the right way. Squeezing the electron into a smaller volume would increase the kinetic energy too much, while extending the electron wave to outer space would be too bad for the potential energy. The balance between the two is the 1s orbital pictured below.
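This tug of war can be put into numbers with a simple sketch. We assume a trial wave of the shape exp(-r/a), where the adjustable size a is measured in Bohr radii. For this shape the average kinetic energy is 1/(2a²) and the average potential energy is -1/a (in hartree, with 1 hartree ≈ 27.2 eV); these are standard textbook results for this particular trial wave, not something derived in this post. The code simply scans over sizes and looks for the lowest total energy.

```python
def kinetic_energy(a):
    """Average kinetic energy (hartree) of the trial wave exp(-r/a)."""
    return 1.0 / (2.0 * a**2)

def potential_energy(a):
    """Average potential energy (hartree) of the trial wave exp(-r/a)."""
    return -1.0 / a

def total_energy(a):
    """Sum of the two contributions; squeezing raises the first one,
    spreading out raises the second one."""
    return kinetic_energy(a) + potential_energy(a)

# Scan sizes from 0.2 to 5 Bohr radii and keep the one with the lowest energy.
sizes = [0.2 + 0.001 * i for i in range(4801)]
best_size = min(sizes, key=total_energy)

BOHR_RADIUS_PM = 52.9177
HARTREE_IN_EV = 27.2114
print(f"best size: {best_size:.3f} Bohr radii = {best_size * BOHR_RADIUS_PM:.1f} pm")
print(f"energy there: {total_energy(best_size) * HARTREE_IN_EV:.2f} eV")
```

The minimum sits at a size of one Bohr radius (about 53 pm) with an energy of about -13.6 eV, the familiar ground-state energy of hydrogen. A wave half that size would gain potential energy but pay four times as much kinetic energy.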

Now we get to the interesting part. Why do hydrogen atoms pair into molecules? What happens when two hydrogen atoms are brought close to each other? This problem is not an easy one. In fact, physicists have to use delicate numerical techniques to precisely describe the formation of the bond. But it is easy to grasp the main reason why the atoms bind. They want to decrease the energy of the system. To understand what is going on we will first make a seemingly scary approximation: That the two electrons do not feel each other and the two protons also do not feel each other. The attraction between the electrons and the protons is the only force in such a simple system. The effect of the repulsive interactions will be taken into account afterwards.

Consider a hydrogen atom with an electron in the lowest energy 1s orbital and another proton (without an electron) somewhere at a distance. The electron wave is concentrated around its proton but it also has a far-reaching tail. As the second proton gets closer to the atom, the electron begins to feel it and can literally “leak out” to it. But once the electron wave has leaked out to the other proton, it can also leak back to the original proton. The electron wave leaks back and forth in a complicated manner. Such a “leaking wave” is around both protons at the same time, but it is not suitable for a good physical description. For that we need to find a standing wave.

We can get the standing wave with a trick: the superposition principle! What about an electron wave which is partly the 1s orbital of one proton and partly the 1s orbital of the other proton? Indeed, in such a case the leaking from the first proton to the other is compensated by the opposite process! This state, also called the bonding orbital, is illustrated in the following animation.

Notice that in this state the electron spends a lot of time between the two protons, thus effectively decreasing its potential energy. The shape (or curvature) of this wave is very similar to that of a single 1s orbital, so the kinetic energy is not changed significantly. Therefore the total energy of the electron is lower than it was in a single atom.

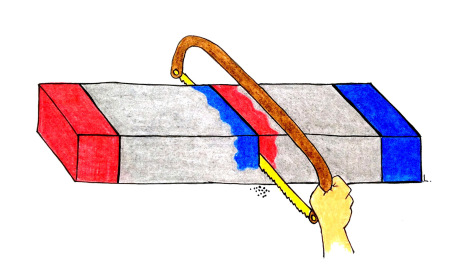

Note that there is one more way to combine the two 1s waves into a standing wave: letting them oscillate out of phase. However, in such a case the magnitude of the wave between the two protons is suppressed and the electron does not decrease its potential energy effectively. This wave is called the antibonding orbital, suggesting that it does not help in creating the bond between the hydrogen atoms.
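The two combinations can be compared in a rough numerical sketch for one electron shared by two protons. We assume atomic units (Bohr radii, hartree) and use the standard textbook closed-form expressions for two undistorted 1s waves a distance R apart: their overlap, the attraction of a 1s electron to the other proton, and a mixed term. We also add the 1/R repulsion between the two protons, so that the total can be compared fairly with a lone atom plus a distant proton (-0.5 hartree). The function names are our own, and this is only the simplest estimate, not the delicate calculation physicists actually use.

```python
import math

E_1S = -0.5  # energy of a lone hydrogen atom in hartree (atomic units)

def overlap(R):
    """Overlap of the two 1s waves whose centres are R Bohr radii apart."""
    return math.exp(-R) * (1 + R + R**2 / 3)

def coulomb(R):
    """Attraction of a 1s electron on one proton to the other proton."""
    return -(1 - (1 + R) * math.exp(-2 * R)) / R

def resonance(R):
    """Mixed term: the second proton's attraction acting on the overlap region."""
    return -(1 + R) * math.exp(-R)

def energy(R, sign):
    """Total energy (hartree) of the in-phase (sign=+1) or out-of-phase
    (sign=-1) combination, including the 1/R proton-proton repulsion."""
    electronic = E_1S + (coulomb(R) + sign * resonance(R)) / (1 + sign * overlap(R))
    return electronic + 1.0 / R

distances = [0.5 + 0.01 * i for i in range(751)]      # 0.5 to 8 Bohr radii
best_R = min(distances, key=lambda R: energy(R, +1))

BOHR_RADIUS_PM, HARTREE_IN_EV = 52.9177, 27.2114
gain = (E_1S - energy(best_R, +1)) * HARTREE_IN_EV
print(f"bonding minimum at {best_R:.2f} Bohr radii = {best_R * BOHR_RADIUS_PM:.0f} pm")
print(f"energy gain there: {gain:.2f} eV")
print(f"antibonding energy there: {energy(best_R, -1):.3f} hartree (lone atom: {E_1S})")
```

In this estimate the in-phase combination dips below the energy of a lone atom, with a minimum at roughly 2.5 Bohr radii, while the out-of-phase combination stays above it at every distance. Because the 1s shapes are kept rigid, the predicted distance is longer and the energy gain smaller than what careful calculations give.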

If the atoms are relatively far from each other, the description given above is good enough. But when the atoms get too close to each other, nasty things begin to happen: the positively charged protons begin to feel each other and start to repel strongly. A balance has to be found in which the protons are close enough for the electron to take advantage of the extra positive charge, yet still sufficiently far apart to keep their mutual potential energy low. Careful calculation reveals that the optimal distance between the two protons is about 106 picometres.

So far we have considered two protons with only one electron, together forming an H2+ ion. However, real hydrogen molecules are electrically neutral, i.e. they have two electrons. Here the existence of spin comes in useful. Both electrons can lower their energy by going to the low-energy bonding orbital, provided that their spins are opposite. Simple, isn’t it?

Not quite. The two electrons also repel each other, which raises the energy and makes the bond weaker than two independent electrons would. Even so, two electrons in the bonding orbital hold the protons together more tightly than one: careful calculation reveals that the optimal distance between the two atoms in a neutral molecule is about 74 picometres, shorter than in the ion. And there is still an energy gain in forming the molecule.

If you think about it, the conclusion is strange. We started with two neutral objects, each composed of an equally large positive and negative charge. How did it happen that the attractive force between them is stronger than the repulsion? Where did the asymmetry come in?

The answer lies in the wave-like character of the electrons. If an experimenter measures positions of the two electrons, he finds that the electrons avoid each other. If one is between the two protons, the other would be somewhere at a distance. Only very rarely would he find that the two electrons happen to be near each other. In this way the wave character of the electrons allows them to decrease their potential energy — something that the protons cannot do. The formation of the bond is an entirely quantum mechanical phenomenon and cannot be explained using classical analogues.

The results we have discussed are sometimes summarized using the following diagram. Here, the “height” of the states (the horizontal black lines) denotes their energy, i.e. high position means high energy and vice versa. The left and the right column represent the electron states in a single hydrogen atom, and the central column the states in a hydrogen molecule. Arrows represent electrons (with spin) occupying some of those states. Because both electrons have lower energy in the molecule than in a single atom, formation of the molecule is favorable.

Why don’t helium atoms pair into molecules? One can draw an analogous diagram for helium. Each helium atom brings two electrons, so there are four in total. Because the bonding orbital is already occupied with both spins, the extra electrons have to go to the higher energy antibonding state. This is too costly, so helium atoms rather stay apart from each other.
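The bookkeeping behind these diagrams is simple enough to write down as a tiny sketch. It fills the bonding orbital first and the antibonding one second, allowing at most two electrons (with opposite spins) in each. The “bond order”, half the difference between the bonding and antibonding electron counts, is a common chemistry shorthand that we introduce here only for illustration; the function names are our own.

```python
def fill_orbitals(n_electrons):
    """Place electrons into the bonding orbital first, then the antibonding
    one; the exclusion principle allows at most two (opposite spins) in each."""
    bonding = min(n_electrons, 2)
    antibonding = min(n_electrons - bonding, 2)
    return bonding, antibonding

def bond_order(n_electrons):
    """Half the difference between bonding and antibonding electron counts."""
    bonding, antibonding = fill_orbitals(n_electrons)
    return (bonding - antibonding) / 2

for name, n in (("H2+", 1), ("H2", 2), ("He2", 4)):
    b, a = fill_orbitals(n)
    print(f"{name:4s}: {b} bonding, {a} antibonding, bond order {bond_order(n)}")
```

A positive bond order means the electrons gain more in the bonding orbital than they lose in the antibonding one. For two helium atoms the count comes out as zero, in line with the diagram: nothing is gained by forming a molecule.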

The same kind of reasoning can be used to explain the formation of other diatomic molecules like O2 and N2, and also of more complicated molecules. The technique we discussed is called linear combination of atomic orbitals (LCAO). Surprisingly, very similar reasoning explains why some crystals (like ice, table salt or diamond) are insulating, while others (like aluminium, iron or indium tin oxide) conduct electricity. We will study this interesting question in the next post.

Let us consider an electron wave that has a well-defined z-component of angular momentum 2ħ. Such a wave corresponds to two sine profiles curling around the center or, in our new jargon, to a phase of 2 × 2π = 4π. This is indicated by the green labels in the figure below. Now, if the wave also had a well-defined angular momentum around the x-axis, the wave would have to change its phase by some fixed amount if rotated by 180° about the x-axis. However, as indicated by the orange arrows, the wave changes phase by 0 somewhere, by π somewhere else and clearly by any other amount in between the two selected points. This is a contradiction: The x-component of angular momentum cannot be well-defined if the z-component is. The phase can increase uniformly around one axis only. Q.E.D.
